CNTI’S SUMMARY

Developments in AI carry new legal and ethical challenges for how news organizations use AI in production and distribution as well as how AI systems use news content to learn. For newsrooms, the use of generative AI tools offers benefits for productivity and innovation. At the same time, it risks introducing inaccuracies, raising ethical issues and undermining public trust. It also creates opportunities for abuse of the copyright on journalists’ original work. To address these challenges, legislation will need to offer clear definitions of AI categories and specific disclosures for each. It must also grapple with the repercussions of AI-generated content for (1) copyright or terms-of-service violations and (2) people’s civil liberties that, in practice, will likely be hard to identify and enforce through policy. Publishers and technology companies will also be responsible for establishing transparent, ethical guidelines for these practices and for providing education on them. Forward-thinking collaboration among policymakers, publishers, technology developers and academics is critical.

The Issue

Early forms of artificial intelligence (prior to the development of generative AI) have long been used to both create and distribute online news and information. Larger newsrooms have leveraged automation for years to streamline production and routine tasks, from generating earnings reports and sports recaps to producing tags and transcriptions. While these practices have been far less common in local and smaller newsrooms, they are increasingly being adopted there. Technology companies also increasingly use AI to automate critical tasks related to news and information, such as recommending and moderating content as well as generating search results and summaries.

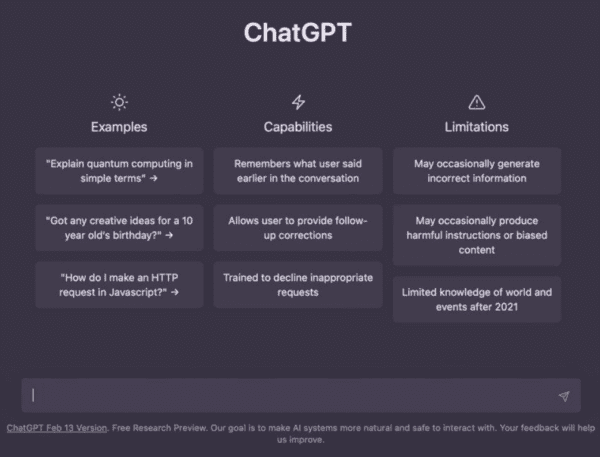

Until now, public debates around the rise of artificial intelligence have largely focused on its potential to disrupt manual labor and operational work such as food service or manufacturing, with the assumption that creative work would be far less affected. However, a recent wave of accessible – and far more sophisticated – “generative AI” systems such as DALL-E, Lensa AI, Stable Diffusion, ChatGPT, Poe and Bard has raised concerns about their potential for destabilizing white-collar jobs and media work, abusing copyright (both against and by newsrooms), giving the public inaccurate information and eroding trust. At the same time, these technologies also create new pathways for sustainability and innovation in news production, ranging from generating summaries or newsletters and covering local events (with mixed results) to pitching stories and moderating comments sections.

As newsrooms experiment with new uses of generative AI, some of their practices have been criticized for errors and a lack of transparency. News publishers themselves are claiming copyright and terms-of-service violations by those using news content to build and train new AI tools (and, in some cases, striking deals with tech companies or blocking web crawler access to their content), while also grappling with the potential of generative AI tools to further shift search engine traffic away from news content.

These developments introduce novel legal and ethical challenges for journalists, creators, policymakers and social media platforms. These include how publishers use AI in news production and distribution, how AI systems draw from news content and how AI policy around the world will shape both. CNTI will address each of these, with a particular focus on areas that require legislation as well as those that should be a part of ethical journalism practice and policy. Copyright challenges emerging from artificial intelligence are addressed here, though CNTI also offers a separate issue primer focusing on issues of copyright more broadly.

What Makes it Complex

In considering legislation, it is unclear how to determine which AI news practices would fall within legal parameters, how to categorize those that do and how news practices differ from other AI uses.

What specific practices and types of content would be subject to legislative policy? How would it apply to other types of AI? What comprises “artificial intelligence” is often contested and difficult to define depending on how broad the scope is (e.g., if it includes or excludes classical algorithms) and whether one uses technical or “human-based” language (e.g., “machine learning” vs. “AI”). New questions have emerged around the umbrella term of “generative AI” systems, most prominently Large Language Models (LLMs), further complicating these distinctions. These questions will need to be addressed in legislation in ways that protect citizens’ and creators’ fundamental rights and safety. At the same time, policymakers must consider the future risks of each system, as these technologies will continue to evolve at a faster pace than policy can reasonably keep up with.

The quantity and type of data collected by generative AI programs introduce new privacy and copyright concerns.

Among these concerns is what data is collected and how it is used by AI tools, in terms of both the input (the online data scraped to train these tools) and the output (the automated content itself). For example, Stable Diffusion was originally trained on 2.3 billion captioned images, including copyrighted works as well as images from artists, Pinterest and stock image sites. Additionally, news publishers have questioned whether their articles are being used to train AI tools without authorization, potentially violating terms-of-service agreements. In response, some technology companies have begun discussing agreements to pay publishers for use of their content to train generative AI models. This issue raises a central question about what in our digital world should qualify as a derivative piece of content, thereby tying it to copyright rules. Further, does new AI-generated content deserve its own copyright? If so, who gets the copyright: the developers who built the algorithm or the entity that published the content?

The copyright challenges of AI (addressed in more detail in our copyright issue primer) also raise ethical dilemmas about profiting from the output of AI models trained on copyrighted creative work without attribution or compensation.

Establishing transparency and disclosure standards for AI practices requires a coordinated approach between legal and organizational policies.

While some areas of transparency may make sense to address through legal requirements (like current advertising disclosures), others will be more appropriate for technology companies and publishers to take on themselves. This means establishing their own principles, guidelines and policies for navigating the use of AI within their organizations – ranging from appropriate application to labeling to image manipulation. But these will need to fit alongside any legal requirements and be similar enough across organizations for the public to understand them. Newsroom education will also be critical, as journalists themselves are often unsure of how, or to what extent, their organizations rely on AI. For technology companies specifically, there is ongoing debate over requirements of algorithmic transparency (addressed in more detail in our algorithmic accountability issue primer) and the degree to which legal demand for this transparency could enable bad actors to hack or otherwise take advantage of the system in harmful ways.

The use of generative AI tools to create news stories presents a series of challenges around providing fact-based information to the public; the question of how that factors into legal or organizational policies remains uncertain.

Generative AI tools have initially been shown to produce content riddled with factual errors. Not only do they include false or entirely made-up information, but they are also “confidently wrong,” creating convincingly high-quality content and offering authoritative arguments for inaccuracies. Distinguishing between legitimate and illegitimate content (or even satire) will, therefore, become increasingly difficult – particularly as counter-AI tools have so far been ineffective. Further, it is easy to produce AI-generated images or content and use them to manipulate search engine optimization results. This can be exploited by spammers who churn out AI-generated “news” content or antidemocratic actors who create scalable and potentially persuasive propaganda and misinformation. For instance, while news publishers such as Semafor have generated powerful AI animations of Ukraine war eyewitness accounts in the absence of original footage, the same technology was weaponized by hackers to create convincing “deepfakes” of Ukrainian President Volodymyr Zelenskyy telling citizens to lay down their arms. While it is clear automation offers many opportunities to improve news efficiency and innovation, it also risks further commoditizing news and undermining public trust in it.

There are inherent biases in generative AI tools that content generators and policymakers need to be aware of and guard against.

Because these technologies are usually trained on massive swaths of data scraped from the internet, they tend to replicate existing social biases and inequities. For instance, Lensa AI – a photo-editing app that launched a viral AI-powered avatar feature – has been alleged to produce hypersexualized and racialized images. Experts have expressed similar concerns about DALL-E and Stable Diffusion, which employ neural networks to transform text into imagery and could be used to amplify stereotypes and produce fodder for sexual harassment or misinformation. The highly lauded AI text generator ChatGPT has been shown to generate violent, racist and sexist content (e.g., that only white men would make good scientists). Further, both the application of AI systems to sociocultural contexts they weren’t developed for and the human work in places like Kenya to make generative AI output less toxic present ethical issues. Finally, while natural language processing (NLP) is rapidly improving, AI tools’ training in dominant languages worsens longstanding access barriers for those who speak marginalized languages around the world. Developers have announced efforts to reduce some of these biases, but the longstanding, embedded nature of these biases and global use of the tools will make mitigation challenging.

State of Research

Artificial intelligence is no longer a fringe technology. Research finds a majority of companies, particularly those based in emerging economies, report AI adoption as of 2021. Experts have begun to document the increasingly critical role of AI for news publishers and technology companies, both separately and in relation to each other. And there is mounting evidence that AI technologies are routinely used both in social platforms’ algorithms and in everyday news work, though the latter is often concentrated among larger and upmarket publishers who have the resources to invest in these practices.

There are limitations to what journalists and the public understand when it comes to AI. Research shows there are gaps between the pervasiveness of AI uses in news and journalists’ understandings of and attitudes toward these practices. Further, audience-focused research on AI in journalism has found that news users often cannot distinguish between AI-generated and human-generated content. They also perceive certain types of AI-generated news as less biased and more credible, despite ample evidence that AI tools can perpetuate social biases and enable the development of disinformation.

Much of the existing research on AI in journalism has been theoretical. Even when the work is evidence-based, it is often more qualitative than quantitative, which allows us to answer some important questions but makes a representative assessment of the situation difficult. Theoretical work has focused on the changing role of AI in journalism practice, the central role of platform companies in shaping AI and the conditions of news work, and the implications of AI dependence for journalism’s value and its ability to fulfill its democratic aims. Work in the media policy space has largely concentrated on European Union policy debates and the role of transparency around AI news practices in enhancing trust.

Future work should prioritize evidence-based research on how AI reshapes the news people get to see – both directly from publishers and indirectly through platforms. AI research focused outside of the U.S. and outside economically developed countries would offer a fuller understanding of how technological changes affect news practices globally. On the policy side, comparative analyses of use cases would aid in developing transnational best practices in news transparency and disclosure around AI.

57% of companies based in emerging economies reported AI adoption in 2021 (McKinsey, 2021)

67% of media leaders in 53 countries say they use AI for story selection or recommendations to some extent (Reuters Institute for the Study of Journalism, 2023)

Notable Studies

State of Legislation

The latest wave of AI innovation has, in most countries, far outpaced governmental oversight or regulation. Regulatory responses to emerging technologies like AI have ranged from direct regulation to soft law (e.g., guidelines) to industry self-regulation, and they vary by country. Some governments, such as Russia and China, directly or indirectly facilitate – and thus often control – the development of AI in their countries. Others attempt to facilitate innovation by involving various stakeholders. Some actively seek to regulate AI technology and protect citizens against its risks. For example, when it comes to privacy, the EU’s legislation has placed heavy emphasis on robust protections of citizens’ data from commercial and state entities, while countries like China assume the state’s right to collect and use citizens’ data.

These differences reflect a lack of agreement over what values should underpin AI legislation or ethics frameworks and make global consensus over its regulation challenging. That said, legislation in one country can have important effects elsewhere. It is important that those proposing policy and other solutions recognize global differences and consider the full range of their potential impacts without compromising democratic values of an independent press, an open internet and free expression.

Legislative policies specifically intended to regulate AI can easily be weakened by a lack of clarity around what qualifies as AI, making violations incredibly hard to identify and enforce. Given the complexity of these systems and the speed of innovation in this field, experts have called for individualized and adaptive provisions rather than one-size-fits-all responses. Recommendations for broader stakeholder involvement in building AI legislation also include engaging groups (such as marginalized or vulnerable communities) that are often most impacted by its outcomes.

Finally, as the role of news content in the training of AI systems becomes an increasingly central part of regulatory and policy debates, responses to AI developments will likely need to account for the protection of an independent, competitive news media. Currently, this applies to policy debates about modernizing copyright and fair use provisions for digital content as well as collective bargaining codes and other forms of economic support between publishers and the companies that develop and commodify these technologies.

Notable Legislation

Resources & Events

Notable Articles & Statements

Can journalism survive AI?

Brookings Institution (March 2024)

RSF and 16 partners unveil Paris Charter on AI and Journalism

Reporters Without Borders (November 2023)

The legal framework for AI is being built in real time, and a ruling in the Sarah Silverman case should give publishers pause

Nieman Lab (November 2023)

These look like prizewinning photos. They’re AI fakes.

The Washington Post (November 2023)

How AI reduces the world to stereotypes

Rest of World (October 2023)

Standards around generative AI

Associated Press (August 2023)

The New York Times wants to go its own way on AI licensing

Nieman Lab (August 2023)

News firms seek transparency, collective negotiation over content use by AI makers – letter

Reuters (August 2023)

Automating democracy: Generative AI, journalism, and the future of democracy

Oxford Internet Institute (August 2023)

Outcry against AI companies grows over who controls internet’s content

Wall Street Journal (July 2023)

OpenAI will give local news millions to experiment with AI

Nieman Lab (July 2023)

Generative AI and journalism: A catalyst or a roadblock for African newsrooms?

Internews (May 2023)

Lost in translation: Large language models in non-English content analysis

Center for Democracy & Technology (May 2023)

AI will not revolutionise journalism, but it is far from a fad

Oxford Internet Institute (March 2023)

Section 230 won’t protect ChatGPT

Lawfare (February 2023)

Generative AI copyright concerns you must know in 2023

AI Multiple (January 2023)

ChatGPT can’t be credited as an author, says world’s largest academic publisher

The Verge (January 2023)

Guidelines for responsible content creation with generative AI

Contently (January 2023)

Governing artificial intelligence in the public interest

Stanford Cyber Policy Center (July 2022)

Initial white paper on the social, economic and political impact of media AI technologies

AI4Media (February 2021)

Toward an ethics of artificial intelligence

United Nations (2018)

Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms

Brookings Institution (May 2019)

Key Institutions & Resources

AIAAIC: Independent, public interest initiative that examines AI, algorithmic and automation transparency and openness.

AI Now Institute: Policy research institute studying the social implications of artificial intelligence.

Data & Society & Algorithmic Impact Methods Lab: Data & Society lab advancing assessments of AI systems in the public interest.

Digital Policy Alert Activity Tracker: Tracks developments in legislatures, judiciaries and the executive branches of G20, EU member states and Switzerland.

Global Partnership on Artificial Intelligence (GPAI): International initiative aiming to advance the responsible development of AI.

Electronic Frontier Foundation (EFF): Non-profit organization aiming to protect digital privacy, free speech and innovation, including for AI.

Institute for the Future of Work (IFOW): Independent research institute tracking international legislation relevant to AI in the workplace.

JournalismAI Project & Global Case Studies: Global initiative empowering newsrooms to use AI responsibly and offering practical guides for AI in journalism.

Local News AI Initiative: Knight Foundation/Associated Press initiative advancing AI in local newsrooms.

MIT Media Lab: Interdisciplinary AI research lab.

Nesta AI Governance Database: Inventory of global governance activities related to AI (up to 2020).

OECD.AI Policy Observatory: Repository of over 800 AI policy initiatives from 69 countries, territories and the EU.

Organized Crime & Corruption Reporting Project: Investigative reporting platform for a worldwide network of independent media centers and journalists.

Partnership on Artificial Intelligence: Non-profit organization offering resources and convenings to address ethical AI issues.

Research ICT Africa & Africa AI Policy Project (AI4D): Mapping AI use in Africa and associated governance issues affecting the African continent.

Stanford University AI Index Report: Independent initiative tracking data related to artificial intelligence.

Term Tabs: A digital tool for searching and comparing definitions of (U.S./English language) technology-related terms in social media legislation.

Tortoise Global AI Index: Ranks countries based on capacity for artificial intelligence by measuring levels of investment, innovation and implementation.

Notable Voices

Rachel Adams, CEO & Founder, Global Center on AI Governance

Pekka Ala-Pietilä, Chair, European Commission High-Level Expert Group on Artificial Intelligence

Norberto Andrade, Founder, XGO Strategies

Chinmayi Arun, Executive Director, Information Society Project

Charlie Beckett, Director, JournalismAI Project

Meredith Broussard, Research Director, NYU Alliance for Public Interest Technology

Pedro Burgos, Knight Fellow, International Center for Journalists

Jack Clark, Co-Founder, Anthropic

Kate Crawford, Research Professor, USC Annenberg

Renée Cummings, Assistant Professor, University of Virginia

Claes de Vreese, Research Leader, AI, Media and Democracy Lab

Timnit Gebru, Founder and Executive Director, The Distributed AI Research Institute (DAIR)

Natali Helberger, Director, AI, Media and Democracy Lab

Aurelie Jean, Founder, In Silico Veritas

Francesco Marconi, Co-Founder, AppliedXL

Surya Mattu, Lead, Digital Witness Lab

Madhumita Murgia, AI Editor, Financial Times

Felix Simon, Fellow, Reuters Institute

Edson Tandoc Jr., Associate Professor, Nanyang Technological University

Scott Timcke, Senior Research Associate, Research ICT Africa

Recent & Upcoming Events

Abraji International Congress of Investigative Journalism

June 29–July 2, 2023 – São Paulo, Brazil

Association for the Advancement of Artificial Intelligence 2023 Conference

February 7–14, 2023 – Washington, DC

ACM CHI Conference on Human Factors in Computing Systems

April 23–28, 2023 – Hamburg, Germany

International Conference on Learning Representations

May 1–5, 2023 – Kigali, Rwanda

2023 IPI World Congress: New Frontiers in the Age of AI

May 25–26, 2023 – Vienna, Austria

RightsCon

June 5–8, 2023 – San José, Costa Rica

RegHorizon AI Policy Summit 2023

November 3–4, 2023 – Zurich, Switzerland

Annual IDeaS Conference: Disinformation, Hate Speech, and Extremism Online

IDeaS

April 13–14, 2023 – Pittsburgh, Pennsylvania, USA

RightsCon

Access Now

June 5–8, 2023 – San José, Costa Rica

Abraji International Congress of Investigative Journalism

Brazilian Association of Investigative Journalism (Abraji)

June 29–July 2, 2023 – São Paulo, Brazil

Cambridge Disinformation Summit

University of Cambridge

July 27–28, 2023 – Cambridge, United Kingdom

EU DisinfoLab 2023 Annual Conference

EU DisinfoLab

October 11–12, 2023 – Krakow, Poland